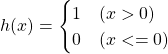

Step function

The step function is simple: it outputs only two values, 0 or 1, no matter what the input value is. In the implementation here, the output is 1 when the input is greater than 0, and 0 otherwise.

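#!/usr/bin/env python
import numpy as np
import matplotlib.pyplot as plt


def step_function(x):
    # x > 0 gives a boolean array; casting to int maps False/True to 0/1.
    # np.int was removed in NumPy 1.24, so the built-in int is used instead.
    return np.array(x > 0, dtype=int)


if __name__ == '__main__':
    x = np.arange(-5, 5, 0.1)
    y = step_function(x)
    plt.plot(x, y)
    plt.grid()
    plt.ylim(-0.1, 1.1)  # range of y values
    plt.show()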

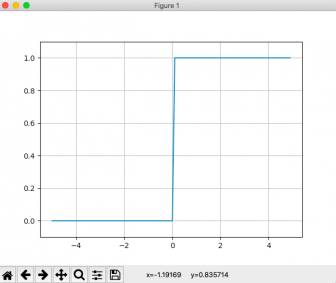
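To make the thresholding concrete, here is a minimal sketch that applies the step function to a few hand-picked inputs instead of plotting it. The sample values and the `mask` and `outputs` names are assumptions chosen for illustration, and `astype(int)` is used as an equivalent way to do the cast.

```python
import numpy as np


def step_function(x):
    # The comparison produces booleans; astype(int) maps False/True to 0/1.
    return (np.asarray(x) > 0).astype(int)


# Hypothetical sample inputs: one negative, zero, one positive.
samples = np.array([-1.0, 0.0, 2.0])

mask = samples > 0               # element-wise comparison, boolean array
outputs = step_function(samples)

print(mask.tolist())     # [False, False, True]
print(outputs.tolist())  # [0, 0, 1]
```

Note that an input of exactly 0 maps to 0 here, because the comparison is strict (`>` rather than `>=`).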

Sigmoid

The sigmoid function is defined as:

$$\mathrm{sigmoid}(x) = \frac{1}{1 + e^{-x}}$$

Plot it with Python.

#!/usr/bin/env python
import numpy as np
import matplotlib.pyplot as plt


def sigmoid(x):
    # np.exp works element-wise, so x can be a scalar or a NumPy array.
    return 1 / (1 + np.exp(-x))


if __name__ == '__main__':
    x = np.arange(-10, 10, 0.1)
    y = sigmoid(x)
    plt.plot(x, y)
    plt.ylim(-0.1, 1.1)  # range of y values
    plt.grid()
    plt.show()

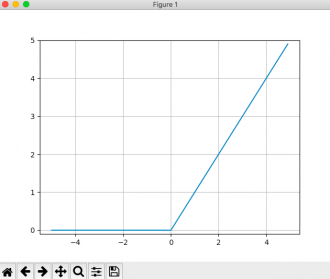

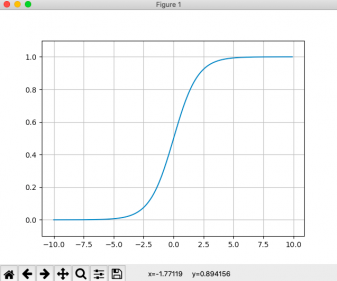

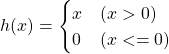
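As a quick numerical check of the sigmoid function, the sketch below evaluates it at a few points and verifies two properties that follow from its formula: the value at 0 is 0.5, and sigmoid(-x) = 1 - sigmoid(x). The sample points and variable names are assumptions made for this example.

```python
import numpy as np


def sigmoid(x):
    # Element-wise logistic function; accepts scalars and NumPy arrays.
    return 1 / (1 + np.exp(-x))


# Hypothetical sample points, symmetric around zero.
x = np.array([-2.0, 0.0, 2.0])
y = sigmoid(x)

print(y)             # three values strictly between 0 and 1
print(sigmoid(0.0))  # 0.5

# Symmetry: sigmoid(-x) equals 1 - sigmoid(x), up to floating-point error.
is_symmetric = np.allclose(sigmoid(-x), 1 - y)
print(is_symmetric)  # True
```

Unlike the step function, the output changes smoothly with the input, which is what the S-shaped curve in the plot shows.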

ReLU

The rectified linear unit (ReLU) function has become popular in neural networks. It outputs the input unchanged when the input is greater than 0, and 0 otherwise:

$$\mathrm{ReLU}(x) = \max(0, x)$$

Plot it with Python.

#!/usr/bin/env python
import numpy as np
import matplotlib.pyplot as plt


def ReLU(x):
    # np.maximum compares element-wise and keeps the larger of 0 and x.
    return np.maximum(0, x)


if __name__ == '__main__':
    x = np.arange(-5, 5, 0.1)
    y = ReLU(x)
    plt.plot(x, y)
    plt.grid()
    plt.ylim(-0.1, 5)  # range of y values
    plt.show()
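The ReLU function can also be checked on a handful of values without plotting. The following is a minimal sketch; the input array and the `result` name are assumptions chosen for illustration.

```python
import numpy as np


def ReLU(x):
    # Element-wise maximum of 0 and x: negatives become 0, the rest pass through.
    return np.maximum(0, x)


# Hypothetical inputs covering negative, zero, and positive values.
x = np.array([-3.0, -0.5, 0.0, 1.5, 4.0])
result = ReLU(x)

print(result.tolist())  # [0.0, 0.0, 0.0, 1.5, 4.0]
```

`np.maximum` is the element-wise function used here; it differs from `np.max`, which reduces an array to its largest value.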